Emre Can Acikgoz

I am a PhD fellow in Computer Science at UIUC, advised by Dilek Hakkani-Tur and Gokhan Tur. I work on Self-Evolving Agents. I was an Applied Scientist Intern at Amazon Alexa AI (Bellevue) in Summer 2025. I completed my MSc in Computer Science at Koc University, where I focused on Large Language Models and Post-Training under the supervision of Deniz Yuret. Previously, I earned my BSc in Electrical and Electronics Engineering (AI focus) from the same institution, during which I also worked with Deniz Yuret on supervised and unsupervised morphological analysis.

If you want to discuss your research or are looking for advice or feedback (non-industrial), feel free to schedule a short chat.

Email / GitHub / HuggingFace / Google Scholar / LinkedIn

Research

My research focuses on Self-Evolving Agents and Agentic Learning. My high-level goal is to build agents that can continuously learn from few samples with minimal updates, regardless of the task, domain, or environment.

2025

arXiv

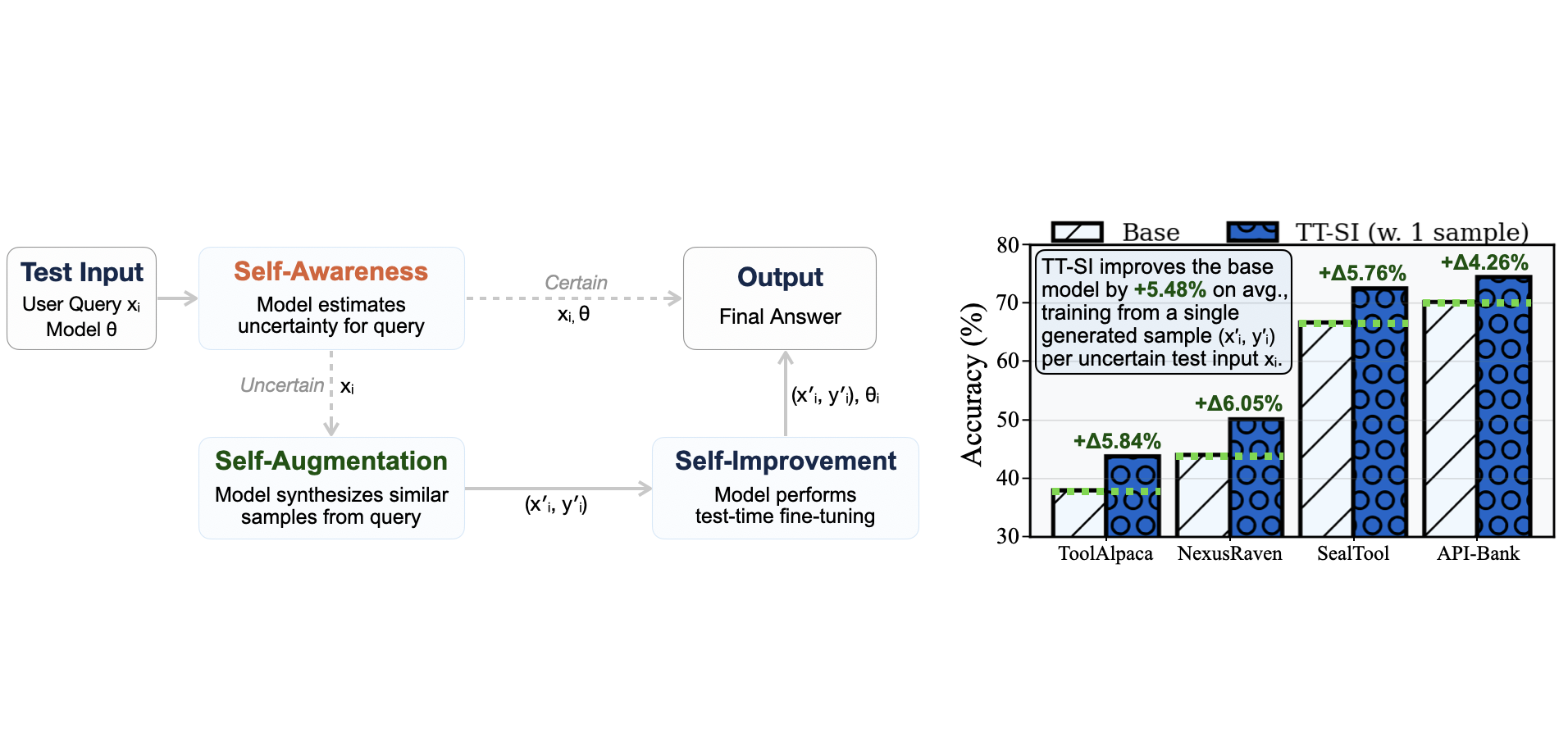

Self-Improving LLM Agents at Test-Time

Emre Can Acikgoz*, Cheng Qian, Heng Ji, Dilek Hakkani-Tür, Gokhan Tur

arXiv, 2025

arxiv

Consider an LLM agent that could train itself while testing. What if it could also sense its own weaknesses and use them for test-time training? Motivated by psychological theories of human learning, we investigate a new test-time self-improvement (TT-SI) algorithm that enables agents to self-improve using only one training instance per uncertain case during inference. Self-Awareness → Self-Data Augmentation → Self-Improvement, all at test-time!

IWSDS 2026

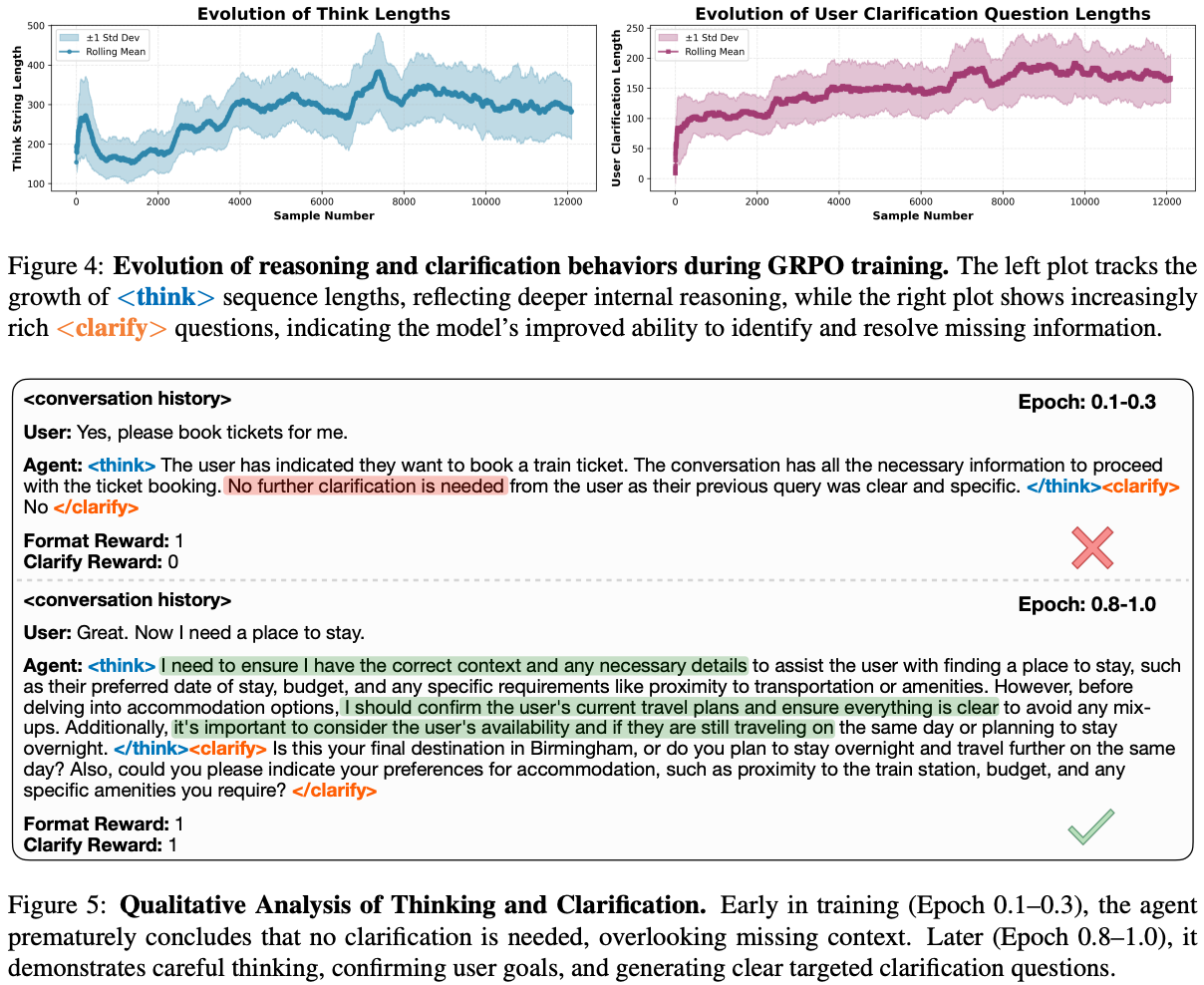

SpeakRL: Synergizing Reasoning, Speaking, and Acting in Language Models with Reinforcement Learning

Emre Can Acikgoz*, Jinoh Oh, Jie Hao, Joo Hyuk Jeon, Heng Ji, Dilek Hakkani-Tür, Gokhan Tur, Xiang Li, Chengyuan Ma, Xing Fan

IWSDS, 2026

arxiv

SpeakRL is a reinforcement learning framework that trains language models to proactively ask clarification questions during multi-turn, task-oriented dialogues. It uses verifiable rewards and structured tokens for internal reasoning and clarification, and significantly improves task completion without lengthening conversations.

IWSDS 2026

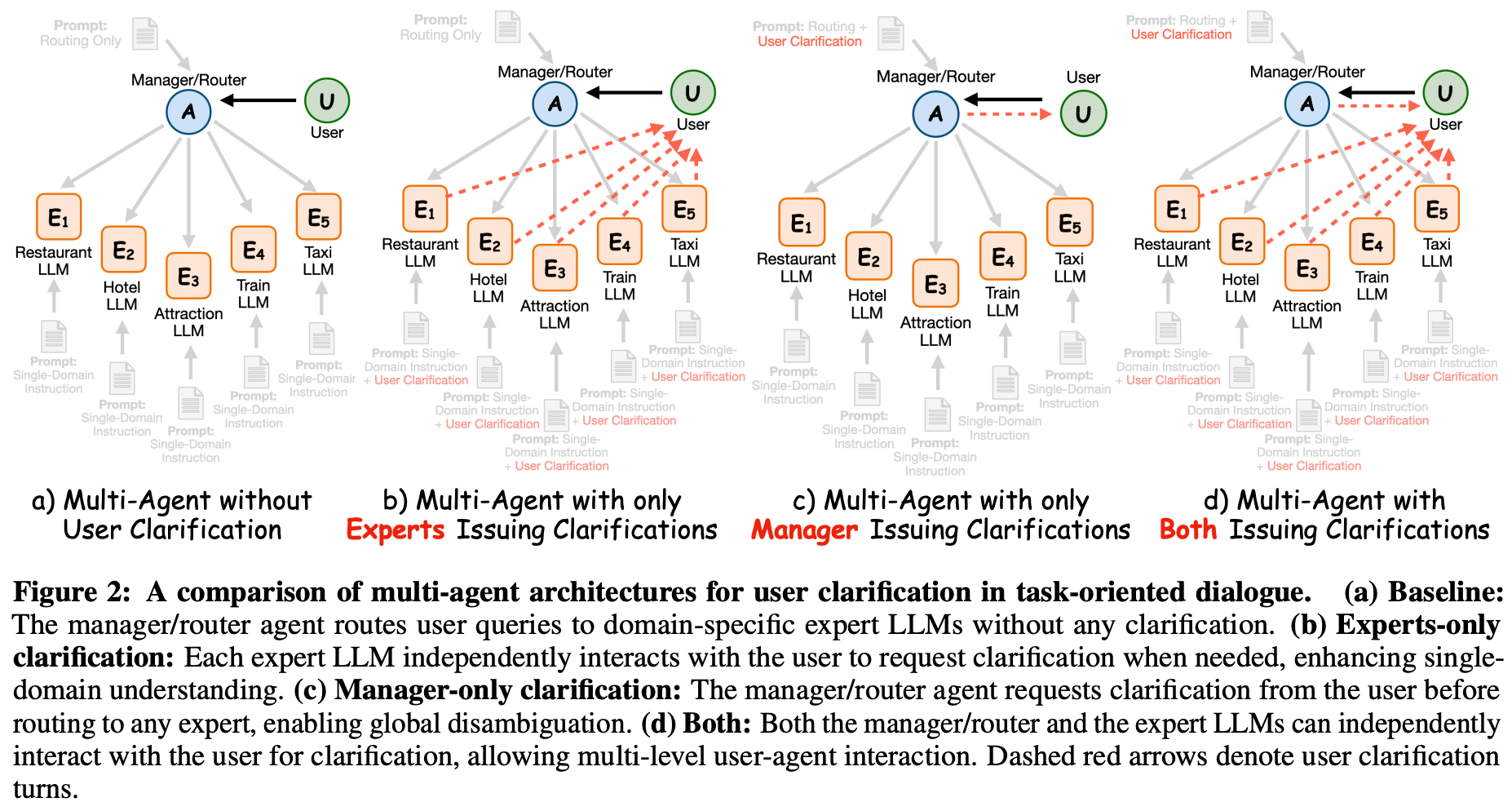

MAC: A Multi-Agent Framework for Interactive User Clarification in Multi-turn Conversations

Emre Can Acikgoz*, Jinoh Oh, Joo Hyuk Jeon, Jie Hao, Heng Ji, Dilek Hakkani-Tür, Gokhan Tur, Xiang Li, Chengyuan Ma, Xing Fan

IWSDS, 2026

arxiv

MAC is a multi-agent clarification system that coordinates when to ask clarification questions, and which agent should ask them, to resolve ambiguous user requests in multi-turn conversations. By systematically managing ambiguity resolution across supervisor and expert roles, it achieves higher task success with shorter dialogues.

SIGDial 2025 (Oral)

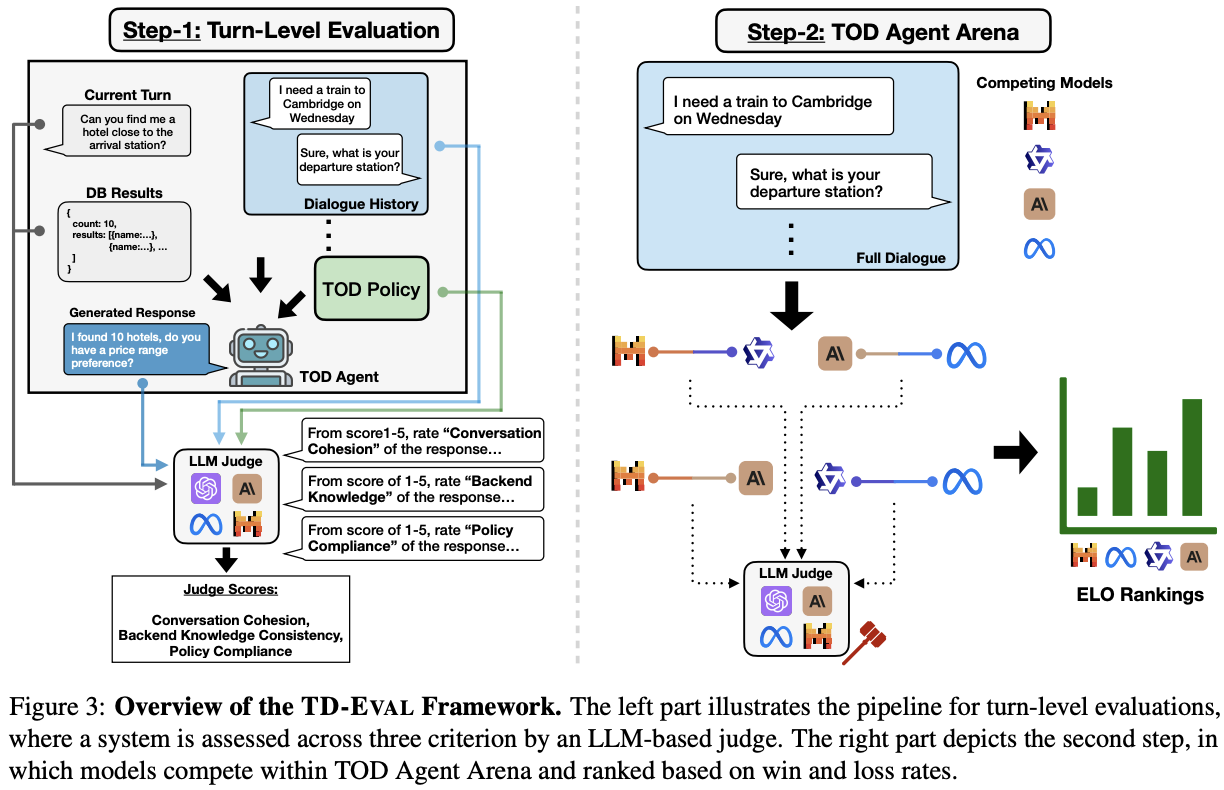

TD-EVAL: Revisiting Task-Oriented Dialogue Evaluation by Combining Turn-Level Precision with Dialogue-Level Comparisons

Emre Can Acikgoz*, Carl Guo*, Suvodip Dey*, Akul Datta, Takyoung Kim, Gokhan Tur, Dilek Hakkani-Tür

SIGDial (Oral), 2025

arxiv / website / code

We propose TD-EVAL, a two-step evaluation framework for TOD systems that combines fine-grained turn-level analysis (focusing on conversation cohesion, knowledge consistency, and policy compliance) with dialogue-level comparisons via a pairwise TOD Agent Arena. This unified approach captures both local and global errors missed by traditional metrics.

NeurIPS 2025

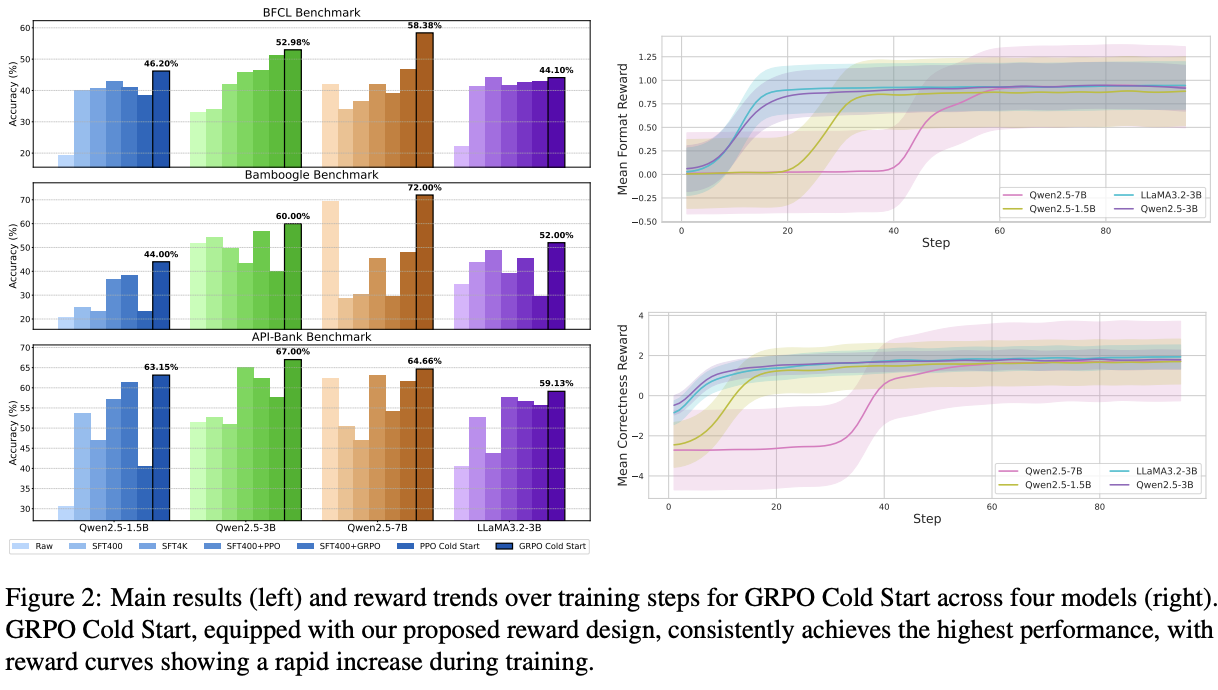

ToolRL: Reward is All Tool Learning Needs

Cheng Qian*, Emre Can Acikgoz*, Qi He, Hongru Wang, Xiusi Chen, Dilek Hakkani-Tür, Gokhan Tur, Heng Ji

NeurIPS, 2025

arxiv / code / huggingface

In ToolRL, we explore different reward strategies for tool learning and present a new approach for tool utilization tasks that achieves a 17% improvement over base models and 15% over SFT versions.

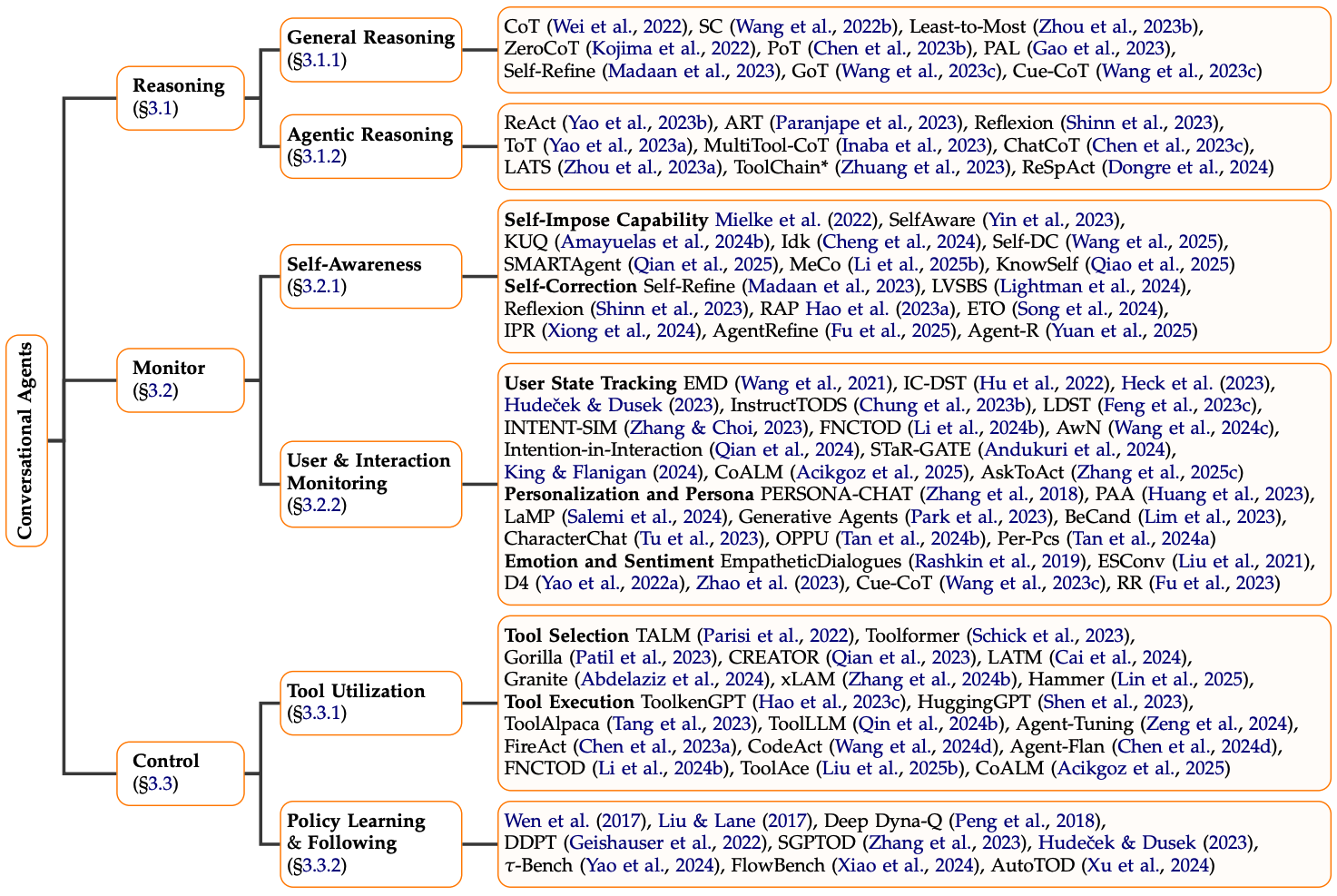

A Desideratum for Conversational Agents: Capabilities, Challenges, and Future Directions

Emre Can Acikgoz*, Cheng Qian*, Hongru Wang*, Vardhan Dongre, Xiusi Chen, Heng Ji, Dilek Hakkani-Tür, Gokhan Tur

arXiv, 2025

arxiv / code

Our proposed taxonomy systematically analyzes Conversational Agents along three essential dimensions: (i) Reasoning: logical and structured thinking for decision-making, (ii) Monitoring: self-awareness and continuous user intention tracking, and (iii) Control: effective tool utilization and policy adherence, together with a representative list of works.

ACL 2025

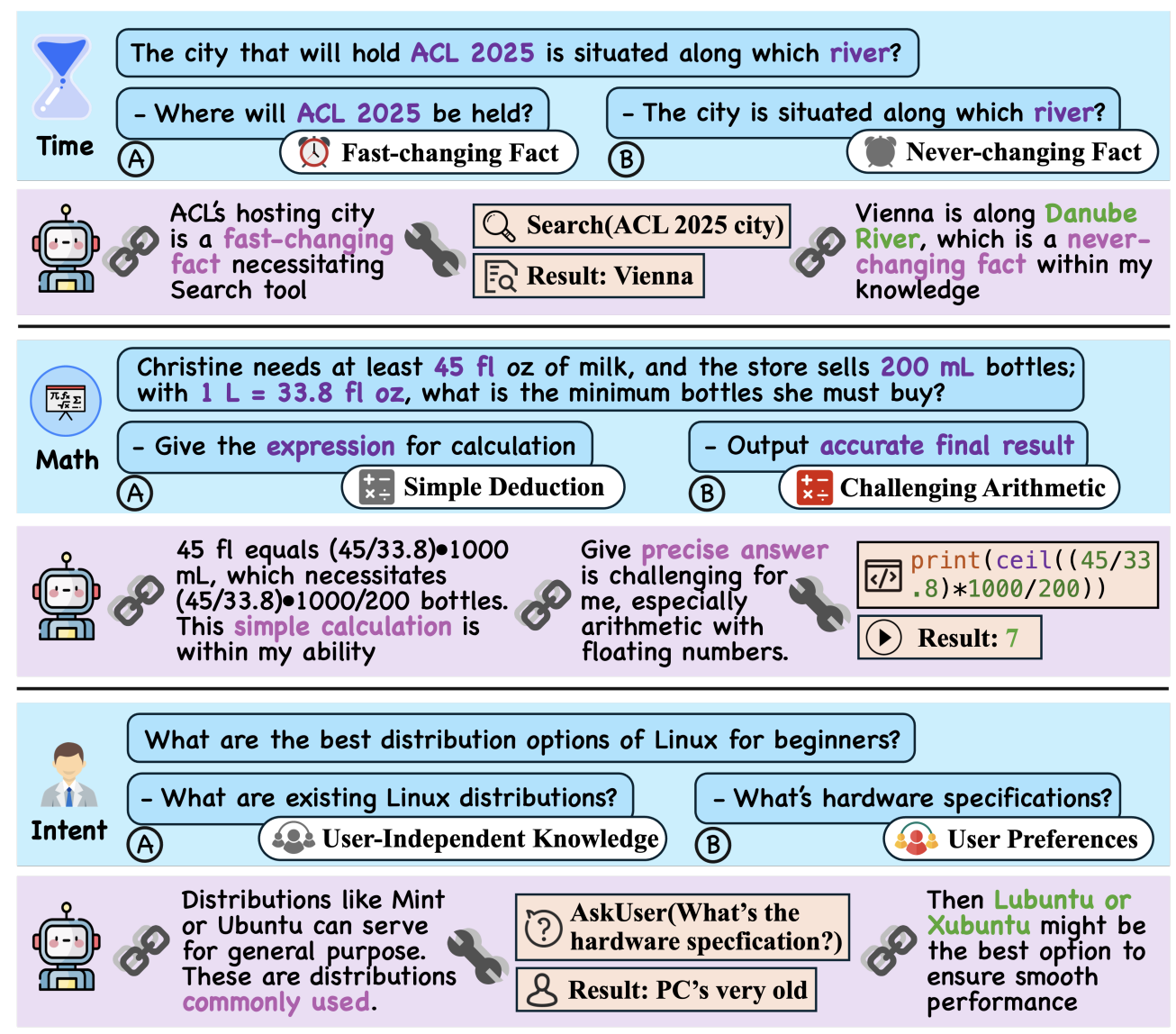

SMART: Self-Aware Agent for Tool Overuse Mitigation

Cheng Qian*, Emre Can Acikgoz*, Hongru Wang, Xiusi Chen, Avirup Sil, Dilek Hakkani-Tür, Gokhan Tur, Heng Ji

Findings of ACL 2025

arxiv / code / huggingface

Inspired by human metacognition, SMART enhances an LLM's self-awareness to reduce tool overuse while boosting performance. Our experiments show that SMARTAgent reduces tool use by 24% while improving performance by 37%.

ACL 2025

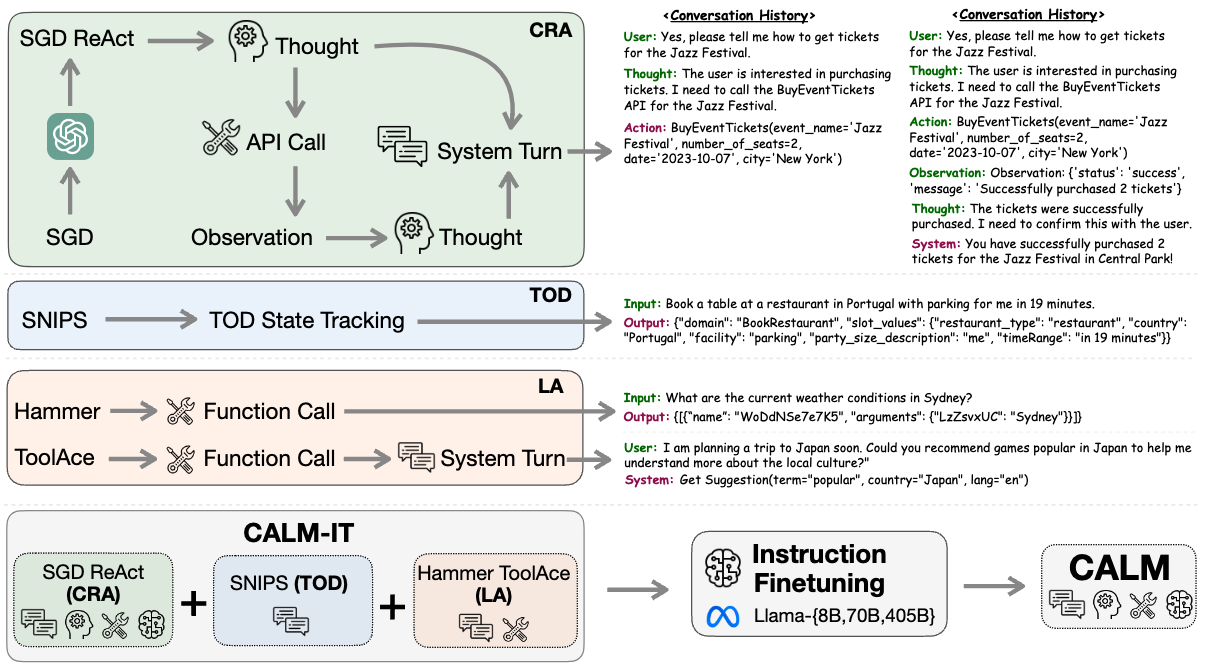

Can a Single Model Master Both Multi-turn Conversations and Tool Use? CoALM: A Unified Conversational Agentic Language Model

Emre Can Acikgoz, Jeremiah Greer, Akul Datta, Ze Yang, William Zeng, Oussama Elachqar, Emmanouil Koukoumidis, Gokhan Tur, Dilek Hakkani-Tür

ACL 2025 Main

arxiv / website / code

CoALM unifies multi-turn dialogue management and complex API usage in a single model. Trained on the CoALM-IT multi-task dataset, CoALM (8B, 70B, 405B) outperforms domain-specific models like GPT-4o on MultiWOZ 2.4, BFCL V3, and API-Bank benchmarks.

IEEE SPM 2025

Conversational Agents in the Era of Large Language Models [Perspectives]

Emre Can Acikgoz, Dilek Hakkani-Tür, Gokhan Tur

IEEE SPM, 2025

IEEEXplore

Large language models (LLMs) have driven a paradigm shift in task-oriented dialogue by enabling AI agents with stronger reasoning, tool use, and instruction-following abilities. These developments give rise to conversational AI agents: systems that merge advanced language understanding with agentic decision-making to achieve dynamic, context-aware, and task-oriented interactions. This work covers ongoing challenges, including multi-turn context management, controllability, personalization, and user alignment.

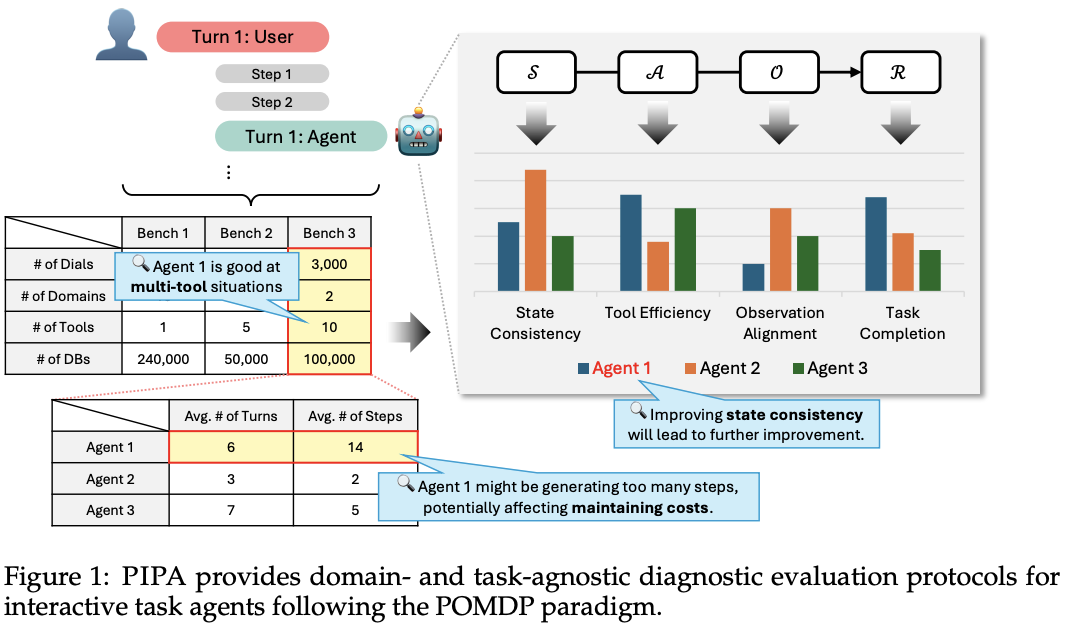

PIPA: A Unified Evaluation Protocol for Diagnosing Interactive Planning Agents

Takyoung Kim, Janvijay Singh, Shuhaib Mehri, Emre Can Acikgoz, Sagnik Mukherjee, Nimet Beyza Bozdag, Sumuk Shashidhar, Gokhan Tur, Dilek Hakkani-Tür

arXiv, 2025

arxiv

PIPA is a unified evaluation protocol for task planning agents that models their behavior within a POMDP framework, enabling fine-grained assessment across the entire agentic process. Unlike traditional task completion metrics, PIPA uses atomic evaluation criteria to diagnose strengths and weaknesses in context understanding, tool use, and decision-making, aligning evaluation more closely with user satisfaction.

2024

IWSDS 2025

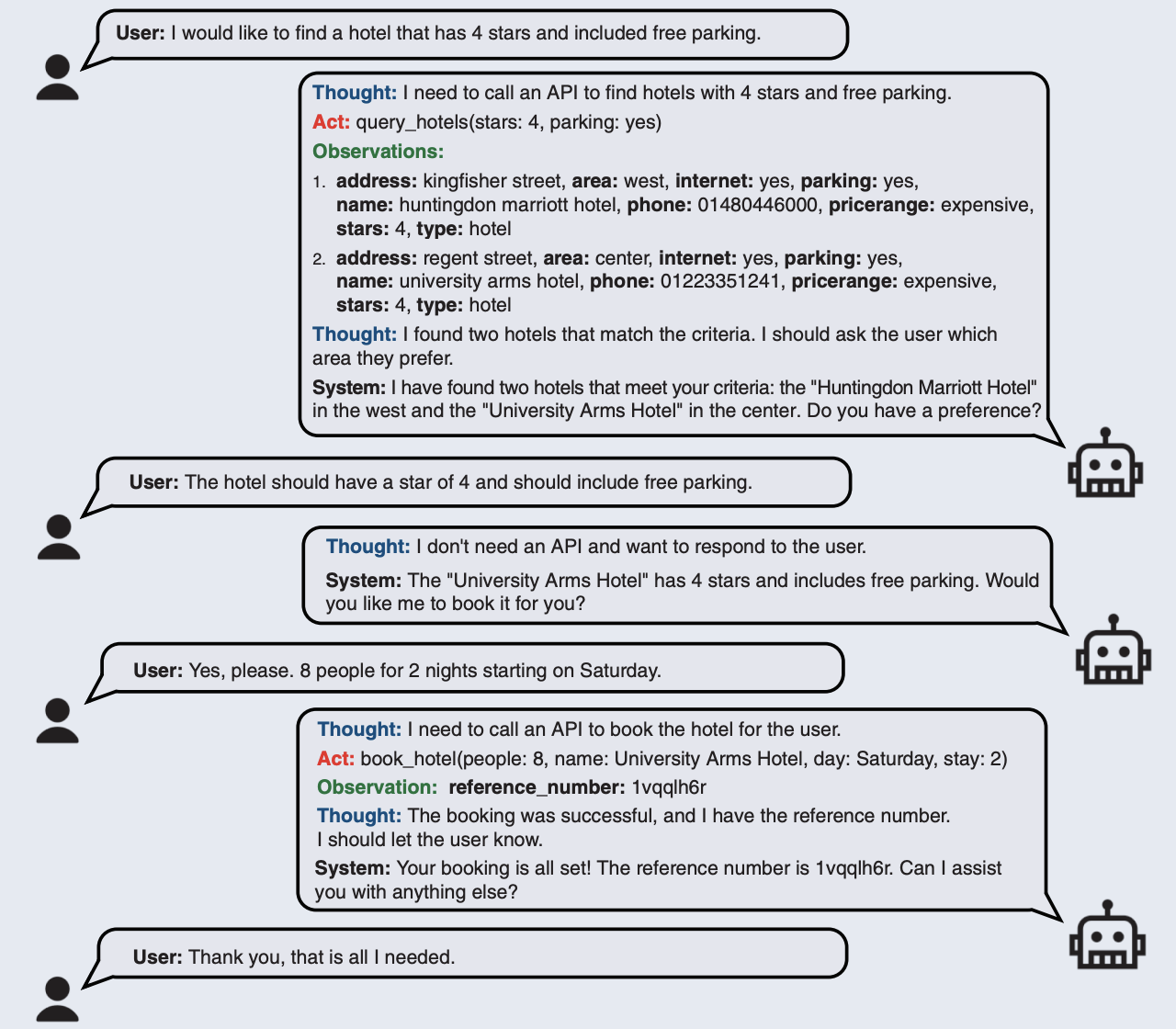

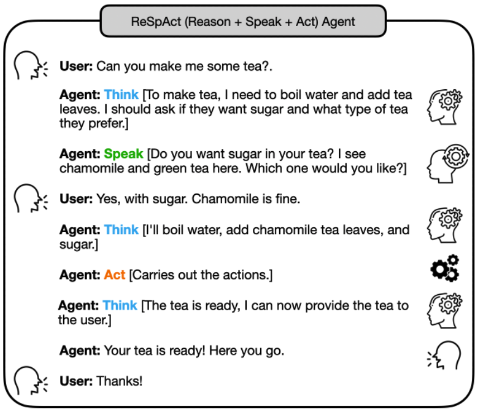

ReSpAct: Harmonizing Reasoning, Speaking, and Acting

Vardhan Dongre, Xiaocheng Yang, Emre Can Acikgoz, Suvodip Dey, Gokhan Tur, Dilek Hakkani-Tür

IWSDS, 2025

arxiv / website / code

ReSpAct is a framework that enables LLM agents to engage in interactive, user-aligned task-solving. It enhances agents' ability to clarify, adapt, and act on feedback.

EMNLP MRL

Bridging the Bosphorus: Advancing Turkish Large Language Models through Strategies for Low-Resource Language Adaptation and Benchmarking

Emre Can Acikgoz, Mete Erdoğan, Deniz Yuret

EMNLP MRL, 2024

arxiv / website / code / poster

This study evaluates the effectiveness of training strategies for large language models in low-resource languages like Turkish, focusing on model adaptation, development, and fine-tuning to enhance reasoning skills and address challenges such as data scarcity and catastrophic forgetting.

NeurIPS GenAI4Health (Oral)

Hippocrates: An Open-Source Framework for Advancing Large Language Models in Healthcare

Emre Can Acikgoz, Osman Batur İnce, Rayene Bech, Arda Anıl Boz, Ilker Kesen, Aykut Erdem, Erkut Erdem

NeurIPS GenAI4Health (Oral), 2024

arxiv / website / poster

We present Hippocrates, an open-source LLM framework specifically developed for the medical domain. We also introduce Hippo, a family of 7B models tailored for the medical domain, fine-tuned from Mistral and LLaMA2 through continual pre-training, instruction tuning, and reinforcement learning from human and AI feedback.

ICLR 2024

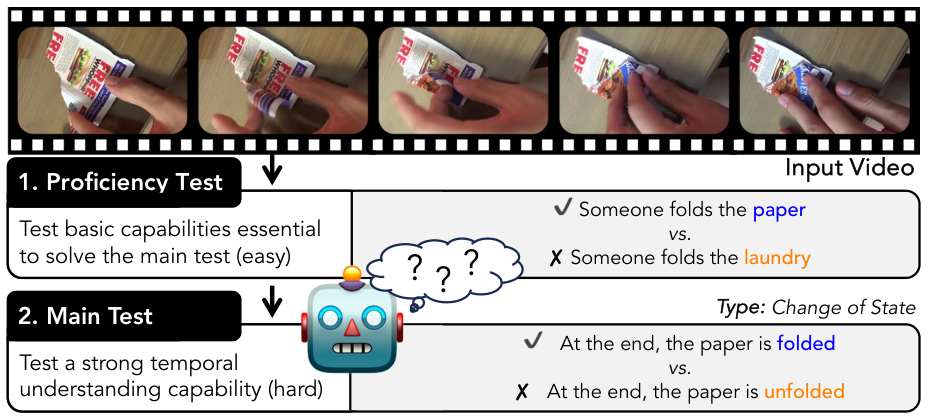

ViLMA: A Zero-Shot Benchmark for Linguistic and Temporal Grounding in Video-Language Models

Ilker Kesen, Andrea Pedrotti, Mustafa Dogan, Michele Cafagna, Emre Can Acikgoz, Letitia Parcalabescu, Iacer Calixto, Anette Frank, Albert Gatt, Aykut Erdem, Erkut Erdem

ICLR, 2024

arxiv / website / code

ViLMA (Video Language Model Assessment) presents a comprehensive benchmark for Video-Language Models, starting with a fundamental comprehension test and followed by a more advanced evaluation for temporal reasoning skills.

2022

EMNLP MRL

Transformers on Multilingual Clause-Level Morphology

Emre Can Acikgoz, Tilek Chubakov, Müge Kural, Gözde Gül Şahin, Deniz Yuret

EMNLP MRL, 2022

arxiv / code / slides

This paper describes the winning approaches in MRL: The 1st Shared Task on Multilingual Clause-level Morphology. Our submission excelled in all three parts of the shared task (inflection, reinflection, and analysis) and won first prize in each category.

Tutor

Comp547: Deep Unsupervised Learning (Spring'24)

Blogs

The Rise of Conversational AI Agents with Large Language Models (November 8, 2024)

Academic Service

Reviewer: AACL 2025, EMNLP 2025, NeurIPS 2025, ACL 2025, NeurIPS 2024

Two Truths and a Lie

Two truths and one fun fact about me: (i) I won national math olympiads twice, (ii) I made my own custom Harley-Davidson when I was 16, (iii) I used to play college football.
