Large Language Models (LLMs) have brought significant advances in Natural Language Processing, transforming our interactions with AI by improving understanding, reasoning, and task-solving. Before generative LLMs, task-oriented dialogue (TOD) systems guided AI-based assistants through predefined tasks by following a structured flow. With LLMs' ability to understand complex language, interpret instructions, and generate high-quality responses, we are moving towards a new breed of AI, Conversational AI Agents, that engage users in more dynamic, context-rich conversations. This brief blog post scopes Conversational Agents in the era of Large Language Models: it clarifies key terminology, explores current challenges, and discusses future directions in this domain.

LLM-oriented Domain Shift

The LLM-driven shift in language understanding and generation began with large-scale self-supervision, where LLMs are trained on vast datasets. Paired with advances in GPU technology, this approach made it practical to train progressively larger models on progressively larger datasets. However, LLMs were initially pre-trained only on a sentence-completion objective, which limits their ability to follow complex human instructions. This led to instruction tuning, where models are fine-tuned specifically to understand and follow instructions. Human feedback then refines this process further by aligning LLM responses with user preferences. In parallel with these parameter-update strategies, in-context learning emerged with GPT-3 (Brown et al., 2020), enabling LLMs to learn from a few examples provided within the prompt. Further methods like Chain-of-Thought (CoT) prompting (Wei et al., 2022) let LLMs tackle complex queries by breaking them down into smaller subtasks for step-by-step reasoning.
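To make in-context learning and CoT prompting concrete, here is a minimal sketch of how a few-shot prompt might be assembled. The `Example` class, the `build_few_shot_prompt` helper, and the `Q:`/`A:` layout are our own illustrative assumptions, not a format prescribed by the cited papers.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    reasoning: str  # step-by-step rationale shown to the model
    answer: str


def build_few_shot_prompt(examples, query, chain_of_thought=True):
    """Assemble an in-context prompt: demonstrations first, then the new query."""
    parts = []
    for ex in examples:
        parts.append(f"Q: {ex.question}")
        if chain_of_thought:
            # CoT: the demonstration spells out intermediate steps before the answer.
            parts.append(f"A: {ex.reasoning} The answer is {ex.answer}.")
        else:
            # Plain few-shot: the demonstration only shows the final answer.
            parts.append(f"A: The answer is {ex.answer}.")
        parts.append("")  # blank line between demonstrations
    parts.append(f"Q: {query}")
    parts.append("A:")  # the model continues from here
    return "\n".join(parts)


demos = [
    Example(
        question="Tom has 3 apples and buys 2 more. How many apples does he have?",
        reasoning="Tom starts with 3 apples. 3 + 2 = 5.",
        answer="5",
    )
]
prompt = build_few_shot_prompt(
    demos, "A train has 4 cars with 10 seats each. How many seats are there?"
)
print(prompt)
```

No model weights change here: the demonstrations live entirely in the prompt, which is what distinguishes this from instruction tuning.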

Despite these advances, when interacting with users, LLMs remain fundamentally limited to their parametric knowledge during response generation and lack emergent agent abilities like reasoning, planning, decision-making, and acting.

Conversational AI Agents

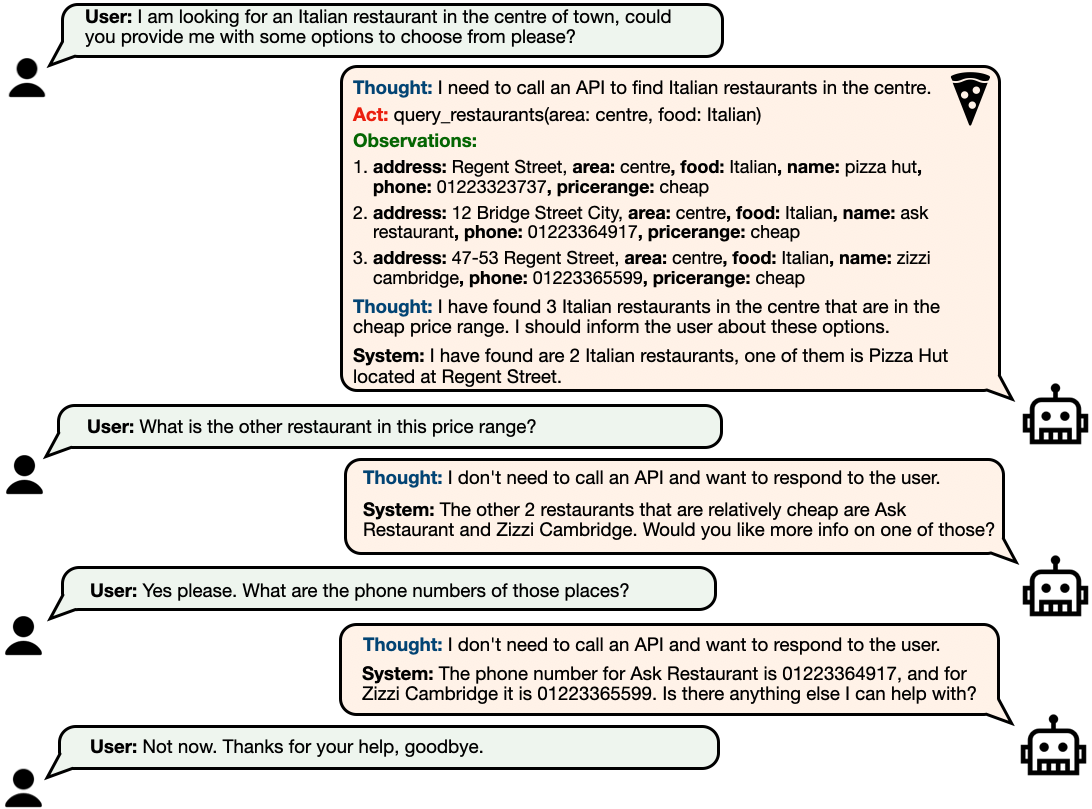

In this blog post, we want to motivate the emerging field of Conversational Agents: LLM-based agents designed to perform multi-turn interactions with users by integrating reasoning and planning capabilities with action execution.

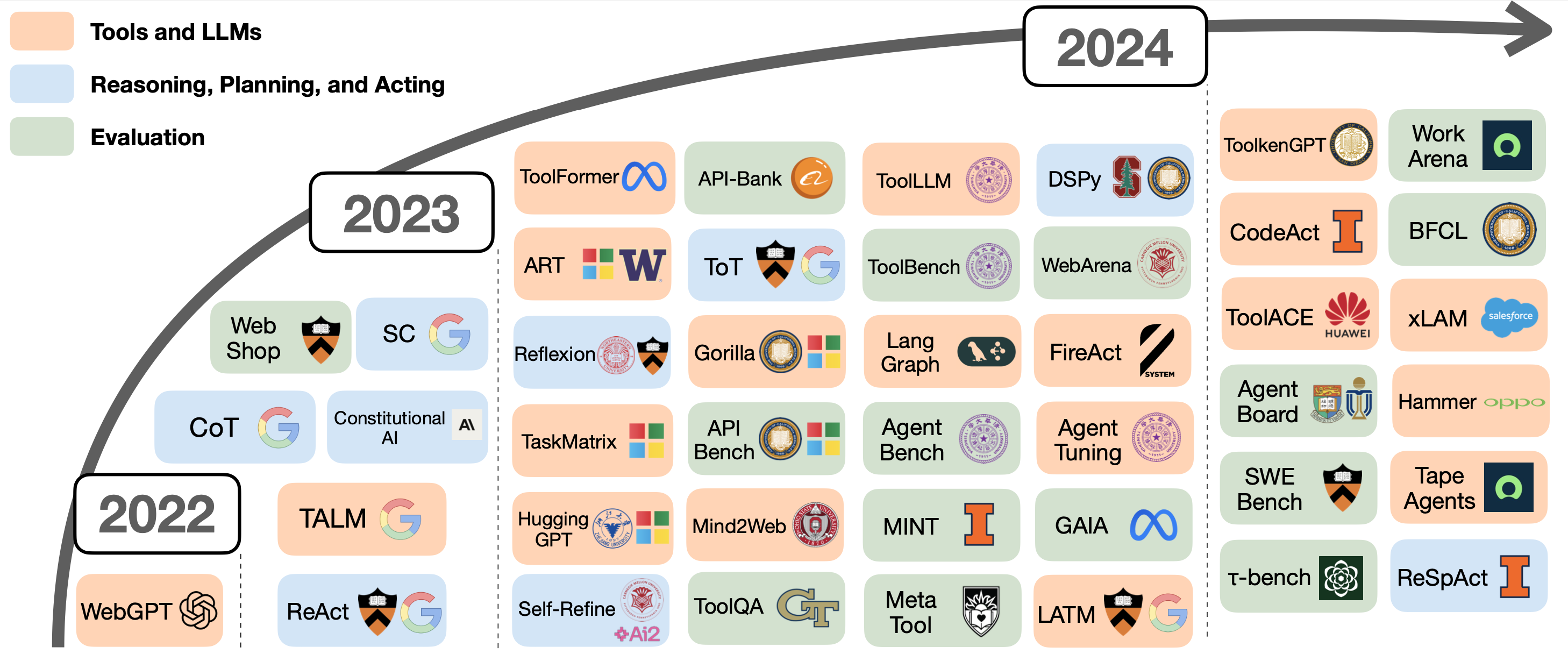

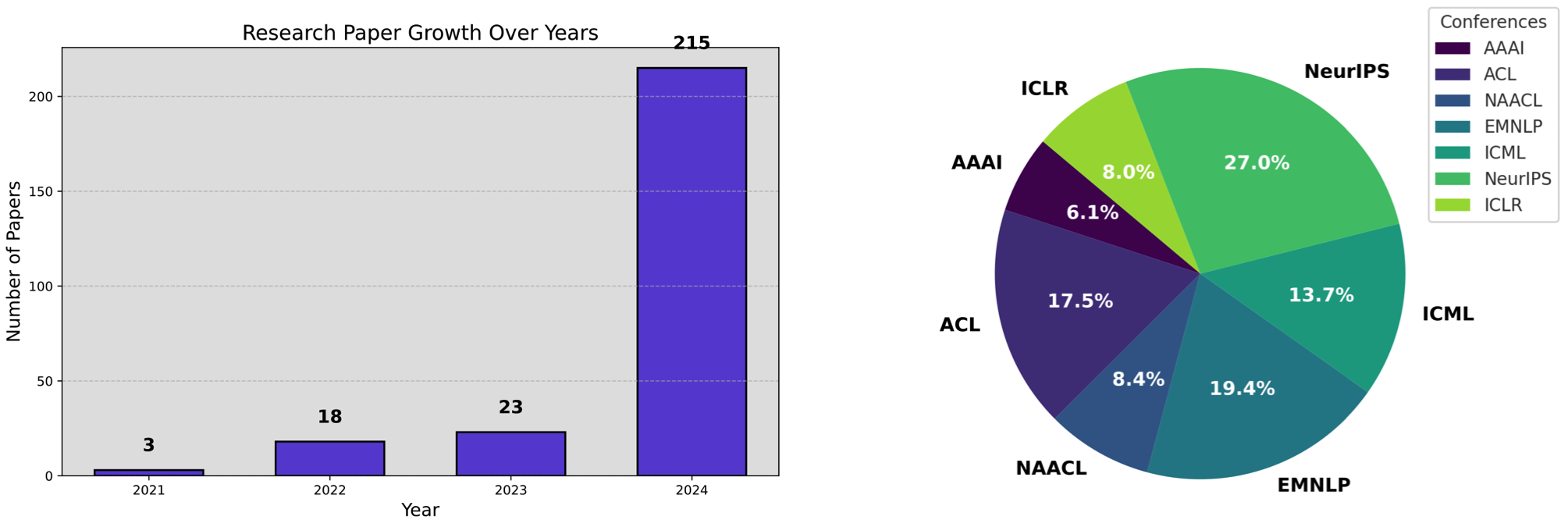

We group recent developments in LLM agents into three categories: (i) tool usage, (ii) reasoning, planning, and acting, and (iii) evaluation.

Tool Usage. While LLMs are good at solving language tasks with their own reasoning (Wei et al., 2022), they struggle with real-time tasks that require performing actions, such as checking live weather or executing specific commands. Integrating tools such as APIs helps LLMs perform these tasks by letting them call functions directly (OpenAI function calling). Studies such as Toolformer (Schick et al., 2023), Gorilla (Patil et al., 2023), and ToolLLM (Qin et al., 2023) have shown that enabling LLMs to use tools improves their ability to complete tasks.
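As a rough illustration of tool calling, the sketch below parses a model-emitted call and dispatches it to a Python function. The JSON shape (`name` plus `arguments`), the `get_weather` stub, and its canned data are assumptions made for this example; they are not the actual interface of any vendor API or of the cited systems.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical stand-in for a live weather API call.
    fake_data = {"Paris": "18C, cloudy", "Istanbul": "24C, sunny"}
    return fake_data.get(city, "unknown")


# Registry mapping tool names the model may emit to real functions.
TOOLS = {"get_weather": get_weather}


def execute_tool_call(raw_call: str) -> str:
    """Parse a model-emitted tool call (assumed JSON) and run the matching tool."""
    try:
        call = json.loads(raw_call)
        name = call["name"]
        args = call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as err:
        return f"error: malformed tool call ({err})"

    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        return f"error: unknown tool '{name}'"
    try:
        return str(tool(**args))
    except TypeError as err:
        # Raised when the model supplies wrong or missing arguments.
        return f"error: bad arguments for '{name}' ({err})"


# In a real agent the string below would come from the LLM's output.
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(execute_tool_call(model_output))
```

Returning error strings instead of raising lets the agent feed the failure back to the model as an observation and try again.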

Reasoning, Planning, and Acting. Adding tools helps, but LLMs still need to think through tasks and decide when and how to call the right actions. To this end, ReAct (Yao et al., 2022) lets LLM agents interleave reasoning with action execution for more effective outcomes. Proprietary models like GPT-4 are ahead in these agent skills, but methods like AgentTuning (Zeng et al., 2023) and FireAct (Chen et al., 2023) train open-source models for agent thinking and acting. Agents also follow planned steps to reach goals, in settings ranging from embodied tasks such as HELPER-X (Sarch et al., 2024) and executable code actions such as CodeAct (Wang et al., 2024) to web navigation such as WebArena (Zhou et al., 2023), sharing progress with users for real-time feedback and smoother interactions.
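The thought-action-observation cycle behind ReAct-style agents can be sketched in a few lines. This is a simplified illustration under our own assumptions: `scripted_llm` is a hard-coded stand-in for a real model, and the `Action: tool[input]` text format and toy `calculator` tool are chosen for this example rather than taken from the paper's code.

```python
import re


def calculator(expression: str) -> str:
    # Toy tool: only handles "a + b" or "a * b" with integers.
    a, op, b = expression.split()
    if op == "+":
        return str(int(a) + int(b))
    return str(int(a) * int(b))


TOOLS = {"calculator": calculator}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: picks the next step from the transcript so far."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply the two numbers.\nAction: calculator[6 * 7]"
    return "Thought: I now have the result.\nAction: finish[42]"


def react(question, llm, max_steps=5):
    """Alternate model steps (thought + action) with tool observations."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        match = ACTION_RE.search(step)
        if match is None:
            break  # the model produced no parsable action
        name, arg = match.groups()
        if name == "finish":
            return arg, transcript
        observation = TOOLS[name](arg) if name in TOOLS else f"unknown tool {name}"
        # The observation is appended so the next model step can condition on it.
        transcript += f"\nObservation: {observation}"
    return None, transcript


answer, trace = react("What is 6 times 7?", scripted_llm)
print(trace)
```

The `max_steps` cap is a simple guard against an agent that never emits a `finish` action.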

Evaluation. Agent evaluation has evolved from checking basic commands (Tur et al., 2011) to more advanced tests, like tracking multi-turn dialogue (Rastogi et al., 2019) and completing complex tasks in real time (Hudecek et al., 2023). New benchmarks like AgentBench (Liu et al., 2023) and GAIA (Mialon et al., 2023) assess agents on tougher challenges, including maintaining consistency over multiple interactions and adapting to changing environments. Recent work, like $\tau$-bench (Yao et al., 2024) and TravelPlanner (Xie et al., 2024), pushes the frontier by measuring both interactive consistency and environmental adaptability in real-world scenarios.

Next-Generation Conversational Task-Completion Agents

Beyond the general agent domain, LLMs have reshaped dialogue systems, moving them from rigid, modular architectures (Young et al., 2002) to adaptive, agent-based frameworks that handle complex, multi-turn interactions with refined prompting and fine-tuning methods (Gupta et al., 2022). In the rest of this section, we examine the capabilities that Conversational Agents most urgently need on the path towards AGI.

Memory and Personalization. Effective Conversational Agents integrate short- and long-term memory (Huang et al., 2023), which enables personalized interactions that recall user preferences. This integration fosters a natural connection, such as remembering a favorite coffee order or special days like a birthday or Valentine's Day (Park et al., 2023).
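One simple way to picture the split between short- and long-term memory is a bounded window of recent turns next to a persistent store of user facts. The `AgentMemory` class below is a hypothetical sketch for illustration only; the cited systems use richer mechanisms such as retrieval and summarization.

```python
from collections import deque


class AgentMemory:
    def __init__(self, max_turns=4):
        # Short-term memory: only the most recent dialogue turns are kept.
        self.short_term = deque(maxlen=max_turns)
        # Long-term memory: user facts that persist across conversations.
        self.long_term = {}

    def add_turn(self, speaker, text):
        self.short_term.append((speaker, text))

    def remember(self, key, value):
        self.long_term[key] = value

    def build_context(self):
        """Render both memories as text to prepend to the next LLM prompt."""
        facts = [f"- {key}: {value}" for key, value in sorted(self.long_term.items())]
        turns = [f"{speaker}: {text}" for speaker, text in self.short_term]
        lines = ["Known user preferences:"] + facts + ["Recent conversation:"] + turns
        return "\n".join(lines)


memory = AgentMemory(max_turns=2)
memory.remember("coffee_order", "oat latte")
memory.add_turn("user", "Hi")
memory.add_turn("agent", "Hello!")
memory.add_turn("user", "The usual, please.")
print(memory.build_context())
```

With `max_turns=2`, the oldest turn falls out of the window, while the coffee order stays available in long-term memory.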

Policy Alignment and Control. Traditional dialogue systems use structured, rule-based policies to ensure controlled responses. Modern LLM-based frameworks like LangGraph and DSPy offer emerging solutions for approximate policy control, but they remain limited in complex scenarios.
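To illustrate what a structured, rule-based policy means in practice, the sketch below encodes the allowed dialogue transitions as a small graph and vets any action an LLM proposes against it. The state names, the `POLICY` table, and `enforce_policy` are hypothetical; this does not use or mirror the LangGraph or DSPy APIs.

```python
# Allowed next actions for each dialogue state (a hypothetical refund workflow).
POLICY = {
    "greet": {"ask_identity"},
    "ask_identity": {"verify_identity"},
    "verify_identity": {"process_refund", "escalate"},
    "process_refund": {"close"},
    "escalate": {"close"},
    "close": set(),
}


def enforce_policy(state, proposed_action, fallback="escalate"):
    """Return the action to take: the proposal if allowed, else a safe alternative."""
    allowed = POLICY.get(state, set())
    if proposed_action in allowed:
        return proposed_action
    # Use the fallback only when the policy permits it from this state;
    # otherwise stay in the current state.
    return fallback if fallback in allowed else state


# The LLM tries to skip identity verification; the policy blocks the jump.
print(enforce_policy("greet", "process_refund"))
```

The model remains free to phrase its responses, but which step it may take next is decided by the explicit table rather than by the model alone.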

Interactivity. Recent advances, like ReSpAct (Vardhan et al., 2023), advocate for user-guided interactions that clarify and adapt agent behavior in real time, addressing ambiguities and obstacles for more natural and controllable dialogues.

Multi-Agent Collaboration. Finally, multi-agent frameworks like AutoGen (Wu et al., 2023) allow specialized agents to collaborate, with a central "concierge" agent orchestrating tasks. This collaborative interaction between different agents can enhance accuracy and user experience in complex settings such as customer service.
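The concierge pattern can be sketched as a router that hands each user message to a specialist. Everything below is a toy assumption for illustration: the specialists are plain functions with canned replies, routing is keyword-based rather than LLM-based, and none of it reflects the AutoGen API.

```python
def billing_agent(message: str) -> str:
    return "Billing: I can look into that charge for you."


def tech_support_agent(message: str) -> str:
    return "Tech support: let's troubleshoot the issue step by step."


# Each specialist is registered with the keywords that should trigger it.
SPECIALISTS = {
    "billing": (("invoice", "refund", "charge"), billing_agent),
    "tech_support": (("error", "crash", "login"), tech_support_agent),
}


def concierge(message: str):
    """Route the message to the first matching specialist, or ask for details."""
    text = message.lower()
    for name, (keywords, agent) in SPECIALISTS.items():
        if any(keyword in text for keyword in keywords):
            return name, agent(message)
    # No specialist matched: the concierge handles the turn itself.
    return "concierge", "Could you tell me a bit more about what you need?"


for msg in ["I was charged twice and need a refund", "The app shows an error", "Hello"]:
    print(concierge(msg))
```

In a real system the routing decision would typically be made by an LLM, and specialists could pass control back to the concierge after finishing their part.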

Challenges and Future Directions

Conversational AI Agents have made remarkable progress, but challenges remain. Ensuring controllability, managing context, and avoiding hallucinations (where the agent generates inaccurate responses) are open areas for improvement. Agents will also need to support personalized interactions, where memory-based systems track user preferences for more tailored responses, enhancing trust and user satisfaction.

Looking forward, this shift presents both exciting opportunities and important challenges. While significant progress has been made in agentic features like memory, policy control, and collaboration, much remains to be explored in creating intelligent, adaptive, and reliable agents on the path towards Artificial General Intelligence (AGI).

Citation

Cited as:

Acikgoz, Emre Can. (Nov 2024). The Rise of Conversational AI Agents with Large Language Models. https://emrecanacikgoz.github.io/Conversational-Agents/.

Or

@article{acikgoz2024agents,
  title = "The Rise of Conversational AI Agents with Large Language Models",
  author = "Emre Can Acikgoz and Dilek Hakkani-Tur and Gokhan Tur",
  journal = "emrecanacikgoz.github.io",
  year = "2024",
  month = "November",
  url = "https://emrecanacikgoz.github.io/Conversational-Agents/"
}

References

[4] Ye et al. “In-Context Instruction Learning.” arXiv preprint arXiv:2302.14691 (2023).

[7] Wei et al. “Chain of thought prompting elicits reasoning in large language models.” NeurIPS 2022.

[8] Wang et al. “Self-Consistency Improves Chain of Thought Reasoning in Language Models.” ICLR 2023.

[14] Yao et al. “ReAct: Synergizing reasoning and acting in language models.” ICLR 2023.

[23] Parisi et al. “TALM: Tool Augmented Language Models.” arXiv preprint arXiv:2205.12255 (2022).

[24] Schick et al. “Toolformer: Language Models Can Teach Themselves to Use Tools.” arXiv preprint arXiv:2302.04761 (2023).

[26] Yao et al. “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv preprint arXiv:2305.10601 (2023).